Learning the React reconciliation algorithm with performance measures

Published: Sun May 03 2020

I wanted to measure the total CPU time resulting from a change to one or more props. This could be the result of an interaction, such as a click, or a change in data, and the rendering may involve multiple components spread out across the page; for example, changing from a logged-out to a logged-in state. This was especially useful to me as I wanted to measure the CPU impact of a large restructuring of a number of components, ultimately to answer the question: is the new architecture faster?

React 16.6 introduced the React Profiler to enable you to measure how components mount, unmount, update and render using the Chrome Dev Tools performance tab. (Read more about Profiling React Performance). In my case, I wanted to measure React on the production environment using the User Timing API. I had used the User Timing API before for other non-trivial processes, so I thought this was going to be relatively straightforward. Additionally, there is some slight overhead with including the React Profiler on production (there is a gist with instructions) and in my situation it felt unnecessary, so I thought that this was a wise choice.

Profiling React Components with the Chrome DevTools Performance Tab

Round 1…

The first step was to understand where to set my performance.mark calls to indicate the start and end of a render cycle. From what I already knew of the React component lifecycle, a component is rendered, after which it triggers the componentDidMount method. Any subsequent change to the component's props or state triggers an update, which fires the componentDidUpdate method. So I thought that we could use this simple flow, render-mount-(render-update)*, to place our marks.
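For readers who have not used the User Timing API before, the mark/measure pattern can be sketched on its own, outside React. The helper name `timeSection` and the mark names are made up for illustration; only `performance.mark`, `performance.measure` and `performance.getEntriesByName` are real API calls, assumed here to be available globally (as they are in browsers and recent Node.js versions).

```javascript
// Minimal sketch of the User Timing pattern: two marks and one measure.
// `timeSection` is a hypothetical helper, not part of any library.
function timeSection(name, work) {
  performance.mark(`${name}:Start`);
  work();
  performance.mark(`${name}:End`);
  performance.measure(name, `${name}:Start`, `${name}:End`);

  // Several measures may share a name; the most recent one is last.
  const entries = performance.getEntriesByName(name, "measure");
  return entries[entries.length - 1].duration;
}

const duration = timeSection("Example:Work", () => {
  let total = 0;
  for (let i = 0; i < 1e5; i++) total += i;
  return total;
});
console.log("Example:Work", duration);
```

The difficulty in the rest of this post is not the API itself, but deciding where in React's lifecycle the two marks belong.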

In the case of functional components (and React 16.8 onwards), these two methods may be replaced with the React hook useEffect. Based on this, I thought I could get an approximate measure of how long each render cycle takes by taking a mark at the start of render() and another at the start of useEffect, using the following simple snippet:

Simple.jsx:

```jsx
import React, { useEffect } from "react";

// Represents a simple functional component
// ATTN: Incorrectly uses `useEffect` instead of `useLayoutEffect`
export default function Simple({ isHappy, showLogs = false }) {
  useEffect(() => {
    performance.mark("Simple:Render:End");
    performance.measure(
      "Simple:Render",
      "Simple:Render:Start",
      "Simple:Render:End"
    );
    if (showLogs) {
      console.log(
        "Simple:Render",
        performance.getEntriesByName("Simple:Render")[
          performance.getEntriesByName("Simple:Render").length - 1
        ].duration
      );
    }
  });

  performance.mark("Simple:Render:Start");

  return (
    <div className="container">
      <span className="value">{isHappy ? ":)" : ":("}</span>
    </div>
  );
}
```

All code is available on Code Sandbox. Simple:Render:Start is marked on each render, while Simple:Render:End is marked on mount or update. In a simpler world, the difference between these two is the time taken for the component to render.

Let’s test it…

I ran my numbers against React Profiler’s onRender and my measurements were (perhaps unsurprisingly) much larger. More importantly, I didn’t have consistency between one render and another. The Profiler would report a decrease while my measure would report an increase.

Time to take a dip…

To understand this, we need to understand how React (referring to v16.0 onwards) performs updates. To grossly simplify the process, React uses a data structure known internally as a fiber. A fiber may be considered an abstraction for a unit of work. Whenever we render a React application, the result is a fiber tree that reflects the current state of the application, conveniently named current. When React handles an interaction or starts processing updates, it takes the current tree and processes these changes on it, resulting in a new updated tree, known as the workInProgress.

A core principle in React (at least so far) is that it never renders just a part of the tree. Only once all updates have been processed and the entire tree has been traversed is the workInProgress tree flushed to the DOM; the pointer on the Root is then swapped so that this tree becomes the new current.
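The two-tree idea can be pictured with a deliberately tiny model. This is only a sketch under heavy simplification: the `root`, `render` and `commit` names below are invented for illustration and bear no relation to React's actual fiber implementation.

```javascript
// Toy model of the current / workInProgress idea; NOT React's real code.
const root = {
  current: { type: "App", props: { loggedIn: false } },
};

// "Render": build a work-in-progress copy without touching `current`.
function render(rootNode, nextProps) {
  return {
    ...rootNode.current,
    props: { ...rootNode.current.props, ...nextProps },
  };
}

// "Commit": swap the pointer in one step so the copy becomes `current`.
function commit(rootNode, workInProgress) {
  rootNode.current = workInProgress;
}

const before = root.current;
const workInProgress = render(root, { loggedIn: true });
console.log(root.current.props.loggedIn); // the old tree is still current here

commit(root, workInProgress);
console.log(root.current.props.loggedIn); // the new tree is now current
```

The point of the model is that nothing observable changes until the single pointer swap, however long building the new tree takes.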

Keeping that in mind, React updates are split into two phases, the render phase and the commit phase. The render includes:

- render()
- shouldComponentUpdate
- getDerivedStateFromProps
- and component lifecycle methods which have since become deprecated in v16 (UNSAFE_... etc.)

It is important to know that the work in the render phase may be performed asynchronously. In contrast, the commit phase is always performed synchronously and therefore may contain side-effects and touch the DOM. (Hint: This is why some methods (prefixed with UNSAFE_) in the render phase have become deprecated, as they were being used “incorrectly” to perform side effects or interact with the DOM).

The methods in the commit phase are:

- getSnapshotBeforeUpdate
- componentDidMount
- componentDidUpdate
- componentWillUnmount

Therefore, the render phase builds the workInProgress tree and the effects list; while the commit phase traverses it and runs these effects. If you want to read more, I highly recommend Max Koretskyi’s Inside Fiber: in-depth overview of the new reconciliation algorithm in React.
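One way to picture why side effects do not belong in the render phase: if render work can be paused and redone, render functions may run more than once, whereas the queued effects run once at commit. The sketch below is a toy scheduler with hypothetical names (`renderPhase`, `commitPhase`), and the "interruption" is simulated by simply discarding the first result.

```javascript
// Toy two-phase model; the names are hypothetical and this is not React's code.
const log = [];

function renderPhase(components) {
  const effects = [];
  for (const component of components) {
    log.push(`render ${component.name}`);
    // Side effects are only queued here, never run.
    effects.push(() => log.push(`effect ${component.name}`));
  }
  return effects;
}

function commitPhase(effects) {
  // Runs synchronously, once per committed update.
  for (const effect of effects) effect();
}

const components = [{ name: "A" }, { name: "B" }];

renderPhase(components); // simulated interruption: result discarded
const effects = renderPhase(components); // render work restarted
commitPhase(effects);

console.log(log);
```

In this model each component renders twice but its effect runs once, which is why anything that must happen exactly once per update is better placed in the commit phase.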

What about our measures?

OK…that was a shallow dive into a fraction of React’s internal workings; but where does this fit in with our performance measurements? If you look into the source of ReactFiberWorkLoop you will see that the Profiler timer is wrapped (startProfilerTimer and stopProfilerTimerIfRunningAndRecordDelta) around calls to beginWork and completeWork. These two functions cover the main activities for a fiber and therefore the profiler is excluding any time spent outside of these activities, resulting in a more concise measurement. In contrast, our measure will include not only work strictly related to the rendering of the component, but also work within the React algorithm itself.
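The difference between the two approaches can be illustrated with a fake clock. The numbers below are invented purely for illustration and the loop is not React's work loop; it only shows that a timer wrapped tightly around each unit of work adds up to less than a single span measured from a start mark to an end mark.

```javascript
// Toy model with a fake clock; all durations are made-up numbers.
let now = 0;
const tick = (ms) => {
  now += ms;
};

const units = ["App", "MyComponentA", "MyComponentB"];
let profilerStyleTotal = 0;

const spanStart = now; // comparable to our Start mark
for (const unit of units) {
  tick(1); // bookkeeping between units, not timed by the profiler-style timer
  const started = now; // profiler-style timer starts
  tick(2); // the unit's own work
  profilerStyleTotal += now - started; // profiler-style timer stops
}
tick(1); // time that passes before our End mark is recorded
const markStyleSpan = now - spanStart;

console.log({ profilerStyleTotal, markStyleSpan });
```

With these made-up numbers the profiler-style total is 6 while the mark-to-mark span is 10, mirroring the "much larger" readings described earlier.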

But the placement of the start/stop timer calls doesn’t explain the inconsistencies. As we have just learnt, the render phase may be asynchronous, which means that React will not necessarily call and finish executing your render method before moving on to the next one. In fact, React renders components in a sort of “depth-first” traversal, whereby it completes the work of a parent only once all its children have completed their work. In our example, this means that the Start marks of several components may be recorded before any End mark is reached. In addition, useEffect does not run immediately after the render() method completes: it is deferred until after the commit phase, once the browser has had a chance to paint.

What’s next?

To resolve the issue with useEffect, according to the React docs we can use useLayoutEffect, which fires synchronously after all DOM mutations. Updating our above code (Code Sandbox) to use useLayoutEffect gives us measurements which seem consistent (albeit marginally larger than the Profiler). Placing this code in a reusable component leaves us with:

MeasureRender.jsx

```jsx
import { useLayoutEffect } from "react";

export default function MeasureRender({ id, children }) {
  useLayoutEffect(() => {
    performance.mark(`${id}:Render:End`);
    performance.measure(
      `${id}:Render`,
      `${id}:Render:Start`,
      `${id}:Render:End`
    );
    console.log(
      `${id}:Render`,
      performance.getEntriesByName(`${id}:Render`)[
        performance.getEntriesByName(`${id}:Render`).length - 1
      ].duration
    );
  });

  performance.mark(`${id}:Render:Start`);

  return children;
}
```

One small alteration we may want to make stems from the fact that useLayoutEffect runs every time the component mounts or updates. In most cases, the first render measure will differ from the following renders. Subsequent renders take advantage of optimisations in the React reconciliation algorithm, such as memoization; and often, if the component needs to fetch data, the first render does not include the data as this is fetched in the following useEffect. We may therefore want to exclude the first render from our measurements. This could be done by updating our MeasureRender to use the useRef hook.

Primarily, useRef is used to store references to the DOM, but it is more than that: it returns a mutable object that persists across re-renders, much like state. Unlike a state update, however, changing the value of a ref does not trigger a re-render. This results in the following:

MeasureRender.jsx

```jsx
import { useLayoutEffect, useRef } from "react";

export default function MeasureRender({ id, children }) {
  const isMountedRef = useRef(false);

  useLayoutEffect(() => {
    if (isMountedRef.current) {
      performance.mark(`${id}:Update:End`);
      performance.measure(
        `${id}:Update`,
        `${id}:Update:Start`,
        `${id}:Update:End`
      );
      console.log(
        `${id}:Update`,
        performance.getEntriesByName(`${id}:Update`)[
          performance.getEntriesByName(`${id}:Update`).length - 1
        ].duration
      );
    } else {
      isMountedRef.current = true;
    }
  });

  if (isMountedRef.current) {
    performance.mark(`${id}:Update:Start`);
  }

  return children;
}
```

In the snippet above, you may notice that we added isMountedRef, which defaults to false. It tells us whether our component has already been mounted. Inside useLayoutEffect, we set it to true if it was previously false. This value persists in isMountedRef.current across renders, so mount no longer skews our metrics.

Asynchronous rendering…

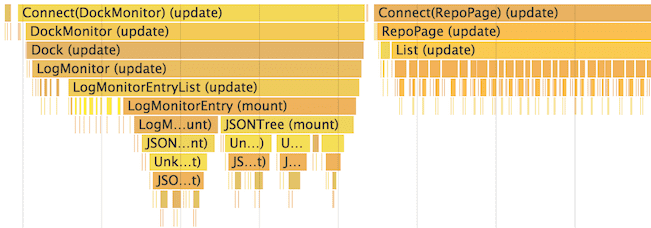

How React is rendering components can be easily illustrated by having two or more instances of the component you want to measure. If you place a logpoint on each performance.mark(`${id}:Render:Start`) you will notice that it will create the Start marks for each component before it reaches the first End mark. You would also notice that the components which are placed first in the tree will have a longer render time compared to their siblings placed at the end of the tree. This is because of the “depth-first” traversal.

React Async Rendering Example

In the example above, assuming a prop which is passed down from App to MyComponentA and MyComponentB has changed, during the render phase, React will call beginWork on App, followed by MyComponentA, MyComponentB and finally MyComponentC. In our MeasureRender component, this means that performance.mark(`${id}:Render:Start`) would be called in that sequence too. In contrast, completeWork will be called in this order: MyComponentA (no children), MyComponentC, MyComponentB (waiting for MyComponentC to call completeWork) and finally App (which was waiting for MyComponentA and MyComponentB to call completeWork).

As a result, our measurements for each component would vary greatly, even if they do the same amount of work, as performance.mark(`${id}:Render:Start`) is called in sequence, while performance.mark(`${id}:Render:End`) is called in the commit phase, which happens after the render() of all components. To get a meaningful measurement, we would place the MeasureRender within the App component (in this example), where the prop change originates.
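The two orderings described above can be reproduced with a small recursive walk. This is a toy traversal, not React's work loop, and the tree shape (App with children MyComponentA and MyComponentB, and MyComponentC under MyComponentB) is an assumption inferred from the description.

```javascript
// Toy depth-first walk; a simplification, not React's actual work loop.
// The tree shape is assumed from the description in the text.
const tree = {
  name: "App",
  children: [
    { name: "MyComponentA", children: [] },
    {
      name: "MyComponentB",
      children: [{ name: "MyComponentC", children: [] }],
    },
  ],
};

const beginOrder = [];
const completeOrder = [];

function performWork(node) {
  beginOrder.push(node.name); // "beginWork": on the way down
  node.children.forEach(performWork);
  completeOrder.push(node.name); // "completeWork": once all children are done
}

performWork(tree);
console.log(beginOrder.join(" > "));
console.log(completeOrder.join(" > "));
```

A component near the top of the tree begins first and completes last, so a mark taken at its start spans the work of everything beneath it.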

I have also implemented a class component equivalent using the static method getDerivedStateFromProps(), which is called right before the render method, and getSnapshotBeforeUpdate() (GitHub issue), which is “invoked right before the most recently rendered output is committed”. The results are slightly more accurate, mostly due to getSnapshotBeforeUpdate() being called earlier (it is the first function called in commitRoot) than the useLayoutEffect hook.

MeasureRenderClass.jsx:

```jsx
import React from "react";

// Feature-detect the User Timing API once, up front
const supportsUserTiming =
  typeof performance !== "undefined" &&
  typeof performance.mark === "function" &&
  typeof performance.clearMarks === "function" &&
  typeof performance.measure === "function" &&
  typeof performance.clearMeasures === "function";

export default class MeasureRender extends React.PureComponent {
  constructor(props) {
    super(props);
    this.state = {};
    if (typeof props.on === "undefined") {
      console.warn(
        "Please specify an `on` prop to listen to prop changes for the prop you would like to measure."
      );
    }
  }

  componentDidUpdate() {
    // Skip measuring where the User Timing API is unavailable
    if (!supportsUserTiming) {
      return;
    }
    performance.measure(
      `${this.props.id}:Update`,
      `${this.props.id}:Update:Start`,
      `${this.props.id}:Update:End`
    );
    console.log(
      `${this.props.id}:Update`,
      performance.getEntriesByName(`${this.props.id}:Update`)[
        performance.getEntriesByName(`${this.props.id}:Update`).length - 1
      ].duration
    );
  }

  // getSnapshotBeforeUpdate() is invoked right before the most recently rendered output is committed
  getSnapshotBeforeUpdate() {
    if (supportsUserTiming) {
      performance.mark(`${this.props.id}:Update:End`);
    }
    return null;
  }

  // getDerivedStateFromProps is invoked right before calling the render method
  static getDerivedStateFromProps(props) {
    if (supportsUserTiming) {
      performance.mark(`${props.id}:Update:Start`);
    }
    return null;
  }

  render() {
    return null;
  }
}
```

Note that we do not return the children any longer as we felt that this was confusing and made it seem like we are only measuring the render time of the children, when we are measuring the render time of all components which update as a result of our prop change.

OK, it works!

Fortunately (!), this was quite a bumpy ride, as a result of which I understand the React reconciliation algorithm better. In testing, the component performs as expected, with very little margin of error when compared to the React Profiler, and it always returns consistent results.

To rAF or not to rAF?

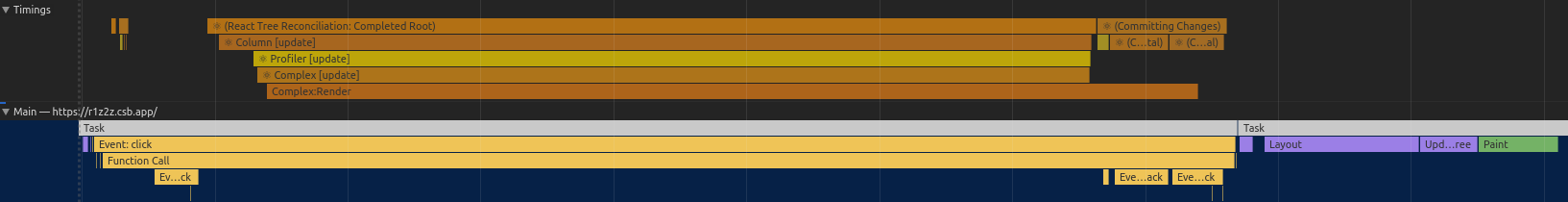

When measuring the performance of JavaScript functions which update the DOM, we might want to include time spent on layout and paint, denoted by the purple and green bars on Chrome DevTools’s performance tab.

(Note: These are not included in React Profiler’s onRender callback.)

Excluding Layout and Paint in Chrome DevTools

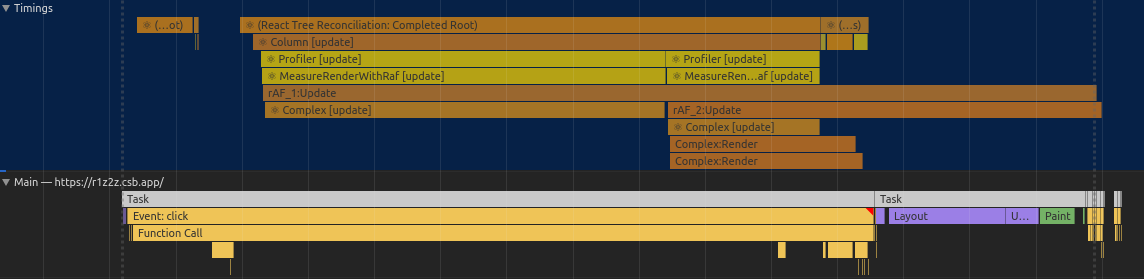

The widely accepted solution is to use a combination of requestAnimationFrame and setTimeout. According to the HTML5 spec, requestAnimationFrame fires before style and layout are calculated (although on Safari it fires after 🙄). We therefore include setTimeout to take us to the end of the event queue, which will occur after style, layout & paint.
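A minimal sketch of the rAF + setTimeout pattern might look like the following. The helper name `measureThroughPaint` and its options are hypothetical; the `raf` and `defer` parameters exist only so that the sketch can be exercised outside a browser, and default to the real `requestAnimationFrame` and `setTimeout`.

```javascript
// Hypothetical helper: not part of React or of the User Timing API.
// Marks the start immediately and the end after rAF + setTimeout, so the
// resulting measure is intended to include style, layout and paint.
function measureThroughPaint(
  name,
  {
    raf = (callback) => requestAnimationFrame(callback),
    defer = (callback) => setTimeout(callback, 0),
  } = {}
) {
  performance.mark(`${name}:Start`);
  raf(() => {
    // rAF callbacks run before style and layout; the timeout pushes the
    // end mark to a later task, after the frame has been produced.
    defer(() => {
      performance.mark(`${name}:End`);
      performance.measure(name, `${name}:Start`, `${name}:End`);
    });
  });
}

// Only call it with the defaults where requestAnimationFrame exists.
if (typeof requestAnimationFrame === "function") {
  measureThroughPaint("Example:Interaction");
}
```

Because the end mark is recorded asynchronously, the measure only becomes available some time after the call returns.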

rAF + setTimeout

As shown in the figure above, we are now including the style, layout & paint in our User Timing measurements. Hooray? Not quite. As we already know, React flushes all changes to the DOM in one go. This means that if we have an interaction which updates the DOM in two distinct components, the two measures will end together, each including the render, layout and paint time of both components. In our case we opted against using rAF + setTimeout, so our measures exclude layout & paint, but I will look into this further over the coming weeks.

Conclusion

Whoa, that was more than I bargained for! But as a result, I definitely understand the React reconciliation algorithm better than I did at the start of writing this component and I can apply this knowledge beyond performance measures.

What about usage of the component itself; do I use it? I have been using this component on the production environment for a handful of applications and I am monitoring its behaviour to assess its reliability. So far it has proven satisfactory as I am very familiar with the rest of the codebase and I know exactly what I am measuring. Would I recommend you to use it? Probably not. As I learnt the hard way, it is important to understand what you are measuring and to confirm that you are measuring correctly by comparing this against a trustworthy standard (the React Profiler in my case). If you take the time to try and implement your own measures, I am optimistic that you will understand your code’s performance and reconciliation in greater detail than by applying the onRender callback; as an incorrect implementation will result in incorrect measurements and understanding why the measures differ may take you down roads you haven’t explored before.

That’s all for today. Please reach out to me on Twitter with any feedback or suggestions.

Check out the demo!
